In part 1 we looked at an overview of motion capture (MoCap). You will recall that motion capture can be broadly defined as the process of digitally recording movement. As we conclude our special feature, let’s take a closer look at how it actually works.

The three systems of motion capture

There are three major systems of MoCap:

- Optical

- Mechanical

- Electromagnetic (magnetic)

Optical System

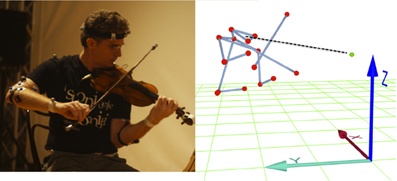

Violinist with optical MoCap system and data visualization

In the optical system, the “performer” traditionally wears marker dots, and image sensors triangulate the subject’s three-dimensional position using two or more cameras calibrated to provide overlapping projections. The markers are either reflective or infrared-emitting. More recent systems can generate accurate data without requiring the performer to wear special tracking equipment. These “markerless” systems use computer algorithms to dynamically analyze multiple streams of optical input and track the surface features of human forms.
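
The triangulation idea can be illustrated with a minimal sketch. It assumes each calibrated camera has already been reduced to a centre point and a ray direction pointing at the marker, and it estimates the marker position as the midpoint of the shortest segment between two such rays. The function name and the camera coordinates are hypothetical; commercial systems use more cameras and more elaborate solvers.

```python
def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a marker position from two camera rays.

    c1, c2: camera centres (x, y, z).
    d1, d2: ray directions from each camera toward the marker.
    Returns the midpoint of the shortest segment joining the two rays.
    """
    def sub(a, b):
        return tuple(x - y for x, y in zip(a, b))

    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    def along(origin, direction, t):
        return tuple(o + t * d for o, d in zip(origin, direction))

    w = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the marker cannot be triangulated")
    t1 = (b * e - c * d) / denom  # distance parameter along ray 1
    t2 = (a * e - b * d) / denom  # distance parameter along ray 2
    p1, p2 = along(c1, d1, t1), along(c2, d2, t2)
    return tuple((x + y) / 2 for x, y in zip(p1, p2))


# Hypothetical setup: two cameras 4 units apart, both sighting the same marker.
print(triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 5.0),
                           (4.0, 0.0, 0.0), (-3.0, 2.0, 5.0)))  # (1.0, 2.0, 5.0)
```

With noisy measurements the two rays rarely intersect exactly, which is why the midpoint is used rather than a true intersection.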

Although optical systems were developed primarily for sports and medicine, they have also been applied in music (for conducting, for example).

- Benefits

  - Very clean and detailed data

  - Cable-less setup; allows the performer more freedom of movement

  - Large data capture area: can track multiple subjects and more complex performances

  - High sampling rates

  - High data capture volume

- Drawbacks

  - Extremely high costs ($150K-$250K)

  - Prone to interference from light, reflections, or physical objects

  - Marker occlusion can interfere with data collection (software can compensate by estimating the position of a missing dot)

  - Originally not real-time because the data required post-processing; advances have made real-time optical tracking possible, though latency is more likely than with mechanical and electromagnetic systems
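
As a rough illustration of how software might estimate the position of an occluded marker, the sketch below fills gaps in a single marker’s track by linear interpolation between the nearest known samples. This is an assumed, deliberately simple strategy, and the function name is hypothetical; real systems may use splines or skeleton constraints instead.

```python
def fill_gaps(track):
    """Linearly interpolate occluded samples in one marker's track.

    track: list of (x, y, z) tuples, with None where the marker was hidden.
    Gaps at the very start or end are left as None, because there is no
    known sample on both sides to interpolate between.
    """
    filled = list(track)
    known = [i for i, point in enumerate(track) if point is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            weight = (i - left) / span  # 0 at the left sample, 1 at the right
            filled[i] = tuple(
                a + weight * (b - a)
                for a, b in zip(track[left], track[right])
            )
    return filled


# The marker is hidden for one frame in the middle and one frame at the end.
print(fill_gaps([(0.0, 0.0, 0.0), None, (2.0, 4.0, 6.0), None]))
```

Linear interpolation works acceptably for short gaps; the longer the occlusion, the less the straight-line guess resembles the real movement.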

Electromagnetic System

‘Hand MoCap system’ using the Polhemus LIBERTY™ 16 electromagnetic tracker

In the electromagnetic system, performers wear an array of magnetic receivers. Position and orientation are calculated from the relative magnetic flux between three orthogonal coils on each receiver and a static magnetic transmitter. The relative intensity of the voltage or current in the three coils allows these systems to calculate both range and orientation by meticulously mapping the tracking volume.

One of the first uses of electromagnetic motion capture was in the military, to track pilots’ head movements. This method is often layered with animation from other input devices.

- Benefits

  - Real-time tracking

  - Captures data with only a fraction of the markers that optical systems need

  - Absolute data: positions and rotations are measured absolutely, so orientation in space can be determined

  - Not occluded by nonmetallic objects

  - Cheaper than optical

- Drawbacks

  - Data is noisier than optical data

  - Prone to magnetic and electrical interference (rebar, wiring, lights, cables, etc.)

  - Restricted data capture area: performers wear cables connecting them to a computer, which limits their freedom of motion

  - Significantly lower sampling rate and data capture volume than optical

Mechanical System

Mechanical MoCap skeleton system

The mechanical system involves a performer wearing a “skeleton,” a human-shaped set of metal or plastic rods and pieces with sensors that frame the body. As the performer moves, the exoskeleton and its articulated mechanical parts move with him or her. The sensors detect and record the performer’s relative motion, translating physical actions into digital data. Mechanical motion capture may also involve the use of gloves, mechanical arms, or other tools for keyframing (defining the beginning and ending points in a smooth transition of animation).

- Benefits

  - Real-time tracking

  - Relatively low cost (can be the cheapest option; even at its most expensive, it costs much less than optical and is competitive with electromagnetic)

  - No interference from light or magnetic fields

  - Some suits can provide limited force feedback or haptic input

- Drawbacks

  - No awareness of the ground (elevation changes such as jumps cannot be measured)

  - Data gathered from the feet tends to be somewhat inaccurate (because of sliding motions)

  - Equipment needs to be calibrated often

  - No data on body orientation (which way the body is facing) without additional sensors

  - Absolute positions are not known; they must be calculated from rotations

  - Skeleton can be restrictive and result in “unnatural motions”
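
The point that positions must be calculated from rotations can be sketched with forward kinematics. The example below is a simplified, assumed model: a planar (2D) chain of rigid segments, with each joint sensor reporting an angle relative to the previous segment. The function name, segment lengths, and angles are hypothetical.

```python
import math


def forward_kinematics(segment_lengths, joint_angles_deg, root=(0.0, 0.0)):
    """Return the 2D position of every joint in a planar chain.

    segment_lengths: length of each rigid segment, in order from the root.
    joint_angles_deg: angle of each joint relative to the previous segment.
    root: assumed position of the first joint; the suit itself cannot
          measure this, so it has to be supplied from elsewhere.
    """
    x, y = root
    heading = 0.0  # accumulated orientation of the current segment
    positions = [(x, y)]
    for length, angle in zip(segment_lengths, joint_angles_deg):
        heading += math.radians(angle)
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


# Hypothetical arm: upper arm 0.30 m raised 90 degrees, forearm 0.25 m bent back 90 degrees.
for joint in forward_kinematics([0.30, 0.25], [90.0, -90.0]):
    print(joint)
```

Because every position is derived from the root and the chain of angles, an error in one joint reading propagates to every joint after it, and the root position itself has to be assumed. That is consistent with the ground-awareness and calibration drawbacks listed above.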

Optical motion capture in classical music

Because the optical system allows the greatest freedom of movement, classical music tends to employ it for MoCap, especially given the advances in real-time tracking (the lack of which previously gave both magnetic and mechanical systems a major edge). Dr. Erwin Schoonderwaldt’s research into motor learning processes and the McGill piano keystroke study (both mentioned in part 1) use optical motion capture systems. Below is more detailed information about the McGill study and setup:

A passive motion capture system (Vicon 460 by Vicon, Los Angeles, CA, USA) equipped with six infrared cameras (MCam2) tracked the movements of 4-mm reflective markers at a sampling rate of 250 frames/s. Fifteen markers on the piano keys monitored the motion of the keys and were used to determine the plane of the keyboard, shown in Figure 1. Another 25 markers were glued on pianists’ finger joints, hand, and wrist to track the pianists’ movements during the performances. The exact marker labels and placement on the hands is shown in Figure 2.
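
To give a sense of what a 250 frames/s sampling rate means in practice, consecutive samples are 4 ms apart, so a marker’s velocity can be estimated by differencing neighbouring positions. The sketch below is not the study’s own analysis; the function name and the sample key heights are hypothetical.

```python
FRAME_RATE_HZ = 250  # sampling rate quoted in the setup description above


def marker_velocity(heights_mm, frame_rate_hz=FRAME_RATE_HZ):
    """Estimate velocity in mm/s between consecutive samples of one coordinate.

    heights_mm: vertical position of a marker at successive frames.
    Returns one value per pair of neighbouring frames (finite differences).
    """
    dt = 1.0 / frame_rate_hz  # 0.004 s between frames at 250 frames/s
    return [(b - a) / dt for a, b in zip(heights_mm, heights_mm[1:])]


# Made-up heights of a key marker as the key is pressed and reaches the keybed.
print(marker_velocity([0.0, -0.5, -1.5, -1.5]))
```

A higher sampling rate shortens the interval between frames, which is what allows fast events such as a keystroke to be resolved at all.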

Another study uses MoCap to examine the perception and physical reactions involved in making and experiencing music. The expression “music-related actions” is used in this research to refer to chunks of combined sound and body motion, typically in the duration range of approximately 0.5 to 5 seconds. MoCap quantifies these “chunk” actions, making them available for study. They are currently being assembled into a database to categorize different kinds of reactions and inform our understanding of music performance and perception.

Motion capture even extends to the composition and performance of music. For example, Transformation – an improvisation piece for electric violin and live electronics – is based on the idea of letting the performer control a large collection of sound fragments while moving around on stage. The piece utilizes a video-based motion tracking system in conjunction with the playback of sounds using “concatenative synthesis,” a technique for synthesizing sounds by chaining together short samples of recorded sound.
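
The chaining step of concatenative synthesis can be sketched in a few lines. This is a minimal illustration and not the implementation used in the piece: fragments are plain lists of sample values, and neighbouring fragments are joined with a short linear crossfade to soften the seam. The selection of which fragment to play next (driven in the piece by the performer’s movement) is omitted, and the function name is hypothetical.

```python
def concatenate(fragments, fade=0):
    """Chain sample lists end to end.

    fragments: list of lists of audio sample values.
    fade: number of samples to overlap between neighbours, blended with a
          linear crossfade; 0 simply butts the fragments together.
    """
    out = []
    for fragment in fragments:
        n = min(fade, len(out), len(fragment))
        start = len(out) - n  # first output sample inside the overlap
        for i in range(n):
            weight = (i + 1) / (n + 1)  # share taken by the incoming fragment
            out[start + i] = (1 - weight) * out[start + i] + weight * fragment[i]
        out.extend(fragment[n:])
    return out


# Two tiny made-up fragments joined with a one-sample crossfade.
print(concatenate([[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]], fade=1))  # [1.0, 1.0, 0.5, 0.0, 0.0]
```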

Crossover to medicine… and future implications for MoCap

Sometimes, projects that start out in one field end up crossing over to another and having a much bigger impact. That was the case for Alexander Refsum Jensenius, head of the Department of Musicology at the University of Oslo. Several years ago, Jensenius developed a video analysis system for measuring how people move to music. The original plan was to utilize this system during concerts to study movements in both musicians and the general audience to learn more about how people perceive music and how music affects them.

However, this tool caught the eye of researchers in other fields, such as neuroscientist Terje Sagvolden. He believed that the system could be used in his ADHD research, in trials with rats, to visualize and systematize the rats’ movements over time. It was an unlikely yet successful collaboration.

Now, Jensenius’ system is being used in the research of cerebral palsy (CP) in premature babies. Lars Adde, a physiotherapist at the Norwegian University of Science and Technology, reached out to Jensenius because he wanted to develop a pilot of how video analysis could map the babies’ movements and highlight potential risk factors of CP.

At the moment, the system is being tested in several large hospitals in Norway, as well as in Chicago, South India and China. The goal is to turn the video analysis system into a commonly used tool in all hospitals. To date, it has been tested on just under 1,000 children. “The system works approximately as follows: a camera is attached to a suitcase with a mattress in it, upon which the child is placed. The child is then filmed for some minutes, and the video file is subsequently analysed by a computer. The screen then displays graphs, curves and figures that describe the child’s movements.”

Jensenius himself says he “never would have guessed that the video analysis system could be used in this way.” This underscores the potential for motion capture technology to play a role in the most unexpected of ways. It can teach us much more about things in classical music that may have been happening for hundreds of years. And it can drive real innovation that leaves a lasting impact far beyond the concert hall.
